TOON Benchmarks

Token-Oriented Object Notation (TOON) is a new format that has been proposed as “a good way to pass structured data to Large Language Models with significantly reduced token usage.”

It is (fairly) token-efficient. But do LLMs understand it as well as better-known formats?

We ran some tests to try to find out.

Test 1: Understanding of Tabular Data

We recently tested how well an LLM (GPT-4.1 nano) understood table data in a variety of different formats.

In this test, we added TOON to the comparison.

Here’s what we found…

Good Accuracy for the Token Costs

Looking at accuracy vs. token costs, TOON was amongst the strongest performers at the token-efficient end of the spectrum.

Lower Accuracy Than With More Token-Hungry Formats

Accuracy with TOON wasn’t as good as with either our slightly less token-efficient ‘markdown table’ format or, unsurprisingly, with more token-hungry formats including markdown-kv, XML, YAML, HTML and JSON.

The difference in accuracy that we saw between using TOON and using the even more token-efficient CSV format wasn’t statistically significant.

(See our original comparison article for details of our methodology.)| Format | Accuracy | 95% Confidence Interval | Tokens |

|---|---|---|---|

| Markdown-KV | 60.7% | 57.6% – 63.7% | 52,104 |

| XML | 56.0% | 52.9% – 59.0% | 76,114 |

| INI | 55.7% | 52.6% – 58.8% | 48,100 |

| YAML | 54.7% | 51.6% – 57.8% | 55,395 |

| HTML | 53.6% | 50.5% – 56.7% | 75,204 |

| JSON | 52.3% | 49.2% – 55.4% | 66,396 |

| Markdown-Table | 51.9% | 48.8% – 55.0% | 25,140 |

| Natural-Language | 49.6% | 46.5% – 52.7% | 43,411 |

| TOON | 47.5% | 44.4% – 50.6% | 21,518 |

| JSONL | 45.0% | 41.9% – 48.1% | 54,407 |

| CSV | 44.3% | 41.2% – 47.4% | 19,524 |

| Pipe-Delimited | 41.1% | 38.1% – 44.2% | 43,098 |

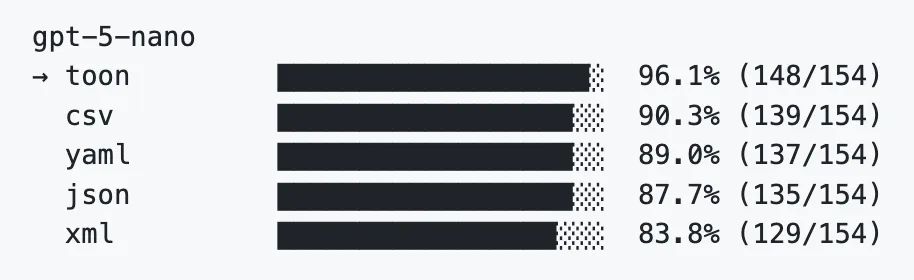

Test 2: Understanding of Nested Data

| Format | Accuracy | 95% CI | Tokens |

|---|---|---|---|

| YAML | 62.1% | [59.1%, 65.1%] | 42,477 |

| Markdown | 54.3% | [51.2%, 57.4%] | 38,357 |

| JSON | 50.3% | [47.2%, 53.4%] | 57,933 |

| XML | 44.4% | [41.3%, 47.5%] | 68,804 |

| TOON | 43.1% | [40.0%, 46.2%] | 45,436 |

In our test of LLM understanding of nested data, this time using GPT-5 nano, TOON performed worse than the other formats we tested, including YAML and markdown that both also used fewer tokens.

Other Observations

Interestingly, the data retrieval benchmarks shared in the TOON GitHub repository showed TOON performing significantly better than other formats with GPT-5 nano, on a seemingly similar kind of test to the ones we ran:

We have run those tests ourselves and found similar results.

We’ve also reviewed the code for the tests and it looks good to us.

Conclusions

We like the idea of designing a format with LLM token efficiency specifically in mind.

It’s unclear at this stage how well LLMs can retrieve information from data provided to them in the TOON format.

On the one hand, in our tests, we failed to find circumstances where TOON was the best-performing format. (And in our test of retrieval from nested data, it performed relatively poorly.)

On the other, in the tests provided in the TOON GitHub repo, TOON performed well.

Let us know if you run any tests on TOON yourself. We’d be interested in your findings.

Enjoyed This Article?

Get more tactical AI agent insights delivered to your inbox

We respect your privacy. Unsubscribe at any time.